Hello, my name is Jeffrey Lonan, and I currently work as a DevOps Engineer at HDE, Inc.

This post is based on my presentation during HDE's 21st Monthly Technical Session.

Containers, Containers Everywhere!

As DevOps practitioners these days, containers are part and parcel of our working life. Developers use them to test their applications in an effort to quash every last (major) bug. Operators use them to test their infrastructure configurations in their quest to create the ultimate “Infrastructure as Code” setup, or even deploy and run containers as part of their infrastructure.

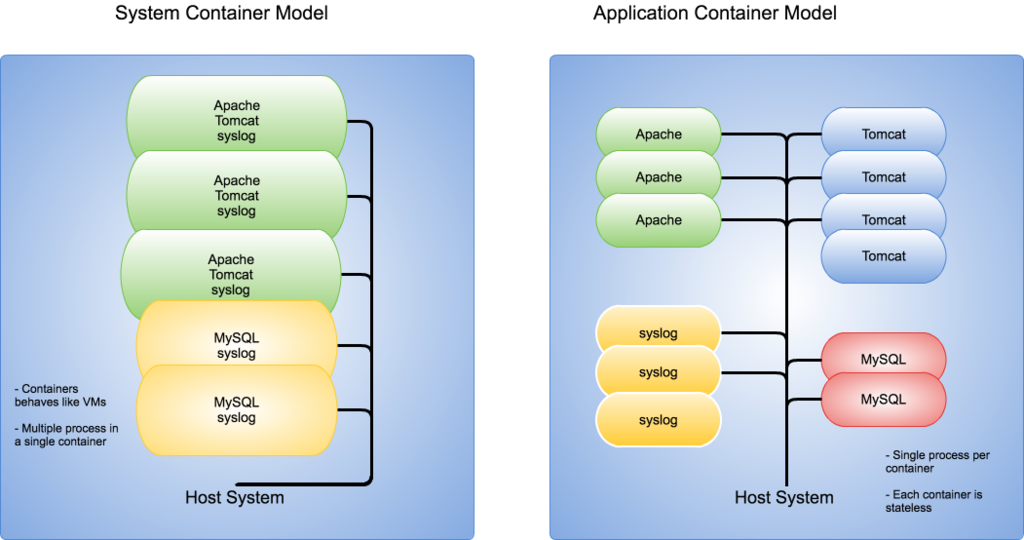

One of the most well-known container solutions out there is Docker, alongside the up-and-coming rkt. These are Application Containers, whose design philosophy is to strip a container down as far as possible so that it runs only a single process, ideally the application we want to run, and to package it into a portable container image which can then be deployed anywhere. Some application container providers (e.g. Docker) have an extensive toolset that makes it easier to build and customise container images and deploy them into the infrastructure.

There are advantages and disadvantages to the Application Container philosophy, but we will not dwell on them in this post. Instead, let's get into a different way of running containers!

System Containers

Enter LXC. LXC is a System Container, which means it is designed to closely replicate a bare-metal or VM host, so you can treat LXC containers as you would a normal server or VM host. You can run your existing configuration management in these containers with no modifications and it will work just fine.

LXC started as a project to utilize the cgroups functionality introduced in 2006, which allows processes to be separated and isolated within their own namespace on a single Linux kernel. Some of the features in LXC include:

- Unprivileged Containers allowing full isolation from the Host system. This works by mapping UID and GID of processes in the container to unprivileged UID and GID in the Host system

- Container resource management via cgroups, either through the API or through the container definition file. The definition file can easily be managed by a configuration management tool

- Container rootfs creation and customization via templates. When creating a container with a pre-existing template, LXC will create the rootfs and download the minimal packages from the upstream repository required to run the container

- Hooks in the container config to call scripts / executables during a container’s lifetime

- API and bindings for C & Python to programmatically create and manage containers

- CRIU support from LXC 1.1.0 onwards (lxc-checkpoint) to create snapshots of running containers, which can then be copied to and re-instantiated on another host machine
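To make the UID/GID mapping behind unprivileged containers a little more concrete, here is a minimal Python 3 sketch of the arithmetic involved. The range used (container IDs 0 to 65535 mapped onto host IDs starting at 100000) is only an illustrative assumption, and the names IdMap and to_host_id are hypothetical helpers, not part of LXC. On a real host the ranges come from the container's ID map configuration and the host's subordinate ID allocation.

```python
from typing import NamedTuple, Optional, Sequence


class IdMap(NamedTuple):
    """One mapped ID range: `count` IDs starting at `container_start`
    inside the container correspond to IDs starting at `host_start`."""
    container_start: int
    host_start: int
    count: int


def to_host_id(container_id: int, id_maps: Sequence[IdMap]) -> Optional[int]:
    """Translate an ID inside the container to the ID the host sees.

    Returns None when the container ID falls outside every mapped range.
    """
    for m in id_maps:
        offset = container_id - m.container_start
        if 0 <= offset < m.count:
            return m.host_start + offset
    return None


# Hypothetical mapping: container IDs 0-65535 map to host IDs 100000-165535.
uid_maps = [IdMap(container_start=0, host_start=100000, count=65536)]

print(to_host_id(0, uid_maps))      # container root -> 100000 on the host
print(to_host_id(1000, uid_maps))   # a regular user -> 101000 on the host
print(to_host_id(70000, uid_maps))  # outside the mapped range -> None
```

The point of the exercise: root inside the container (UID 0) is just an ordinary, unprivileged UID from the host's point of view.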

Our First Container

On a Linux system, you can use your favourite package manager to install lxc. For this example, let's use yum on CentOS 7:

$ yum install -y lxc bridge-utils

The bridge-utils package is needed because the containers communicate with the outside world via the host system's network bridge interface.

Assuming you have created a bridge interface called virbr0, let's proceed with actually launching a container! We will use the lxc-create command to create the root file system (rootfs) for our container, using the CentOS template and naming the container test01:

    $ lxc-create --name test01 -t centos
    Host CPE ID from /etc/os-release: cpe:/o:centos:centos:7
    dnsdomainname: Name or service not known
    Checking cache download in /var/cache/lxc/centos/x86_64/7/rootfs ...
    Cache found. Updating...
    Loaded plugins: fastestmirror
    Loading mirror speeds from cached hostfile
     * base: ftp.iij.ad.jp
     * extras: download.nus.edu.sg
     * updates: ftp.iij.ad.jp
    No packages marked for update
    Loaded plugins: fastestmirror
    Cleaning repos: base extras updates
    0 package files removed
    Update finished
    Copy /var/cache/lxc/centos/x86_64/7/rootfs to /var/lib/lxc/test01/rootfs ...
    Copying rootfs to /var/lib/lxc/test01/rootfs ...
    Storing root password in '/var/lib/lxc/test01/tmp_root_pass'
    Expiring password for user root.
    passwd: Success

    Container rootfs and config have been created.
    Edit the config file to check/enable networking setup.

    The temporary root password is stored in:

    '/var/lib/lxc/test01/tmp_root_pass'

    The root password is set up as expired and will require it to be changed
    at first login, which you should do as soon as possible. If you lose the
    root password or wish to change it without starting the container, you
    can change it from the host by running the following command (which will
    also reset the expired flag):

    chroot /var/lib/lxc/test01/rootfs passwd

So our container rootfs has been created! Note that LXC auto-generates a temporary root password for the first login. Let's start our container using lxc-start and check for running containers with lxc-ls --active:

    $ lxc-start -n test01 -d
    $ lxc-ls --active
    test01

Our first container is up and running! We can access the container over SSH with the auto-generated password. If we are not sure about its IP address, we can also use the lxc-attach command to get a shell inside the container and do whatever is needed (e.g. installing packages, setting the IP address, etc.).

    $ lxc-attach -n test01
    [root@test01 ~]# cat /etc/hosts
    127.0.0.1 localhost test01

Our first container was created and instantiated using default settings, but default settings are no fun! At some point we will want to customise our containers, and for that we need to work with the container configuration file.

LXC Container Configuration

The container config file is created in the path you specify when running lxc-create, which defaults to /var/lib/lxc/<container-name>/config. Here is a sample custom configuration file for a container named lxc3:

    # LXC Container Common Configuration
    lxc.rootfs = /mnt/lxc3/rootfs
    lxc.include = /usr/share/lxc/config/centos.common.conf
    lxc.arch = x86_64
    lxc.utsname = lxc3
    lxc.autodev = 1
    lxc.kmsg = 1

    # LXC Container Network Configuration
    lxc.network.type = veth
    lxc.network.flags = up
    lxc.network.link = virbr0
    lxc.network.name = eth0
    lxc.network.ipv4 = 192.168.99.21/24

    # LXC cgroups config
    lxc.cgroup.cpuset.cpus = 0-1
    lxc.cgroup.memory.limit_in_bytes = 1073741824

    # LXC Mount Configs
    lxc.mount.entry = /opt/lxc3 opt none bind 0 0

    # LXC Hooks
    lxc.hook.pre-start = /mnt/lxc3/setenv.sh

The configuration file above is used to define the container properties, like hostname, IP addresses, host folders to be mounted, among other things, using key-value pairs. We won't go into details on the container configurations here, so if you are curious about the configuration file, do check out the documentation.
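Since the file is just key-value pairs, it is easy to inspect from a script. The following is a rough Python 3 sketch, not an official parser: parse_lxc_config is a hypothetical helper, and the embedded sample only reuses a few keys from the lxc3 configuration. It returns a list of pairs rather than a dict because some keys (for example lxc.mount.entry) may appear more than once in a real file.

```python
from typing import List, Tuple


def parse_lxc_config(text: str) -> List[Tuple[str, str]]:
    """Parse `key = value` lines, skipping blank lines and `#` comments."""
    entries = []
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not a key-value line; ignored in this sketch
        entries.append((key.strip(), value.strip()))
    return entries


sample = """
# LXC Container Common Configuration
lxc.utsname = lxc3
lxc.kmsg =1

# LXC cgroups config
lxc.cgroup.memory.limit_in_bytes = 1073741824
lxc.mount.entry = /opt/lxc3 opt none bind 0 0
"""

entries = parse_lxc_config(sample)
settings = dict(entries)  # fine here because no key repeats in the sample

# The cgroup memory limit is given in bytes; 2**30 bytes is 1 GiB.
memory_gib = int(settings["lxc.cgroup.memory.limit_in_bytes"]) / 2**30
print(entries[0])
print("memory limit: %.1f GiB" % memory_gib)
```

This also shows what the memory limit in the sample amounts to: 1073741824 bytes is exactly 1 GiB.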

LXC Python APIs

So, you've written your container configurations and created your containers. What next? Automate your container deployment and configuration? Of course! I created a simple Python script for the previous MTS session to create and start a container, so let's check it out!

    #!/usr/bin/env python
    # print_function lets the same print() calls work on Python 2 and 3
    from __future__ import print_function

    import argparse
    import sys

    import lxc

    parser = argparse.ArgumentParser(description='Create a LXC container')
    parser.add_argument('--name', help='Container name')
    parser.add_argument('--create', help='Create the Container', action="store_true")
    parser.add_argument('--start', help='Start the Container', action="store_true")
    parser.add_argument('--stop', help='Stop the Container', action="store_true")
    args = parser.parse_args()

    # Set Container Name
    c = lxc.Container(args.name)

    # Set container initial configuration
    config = {"dist": "centos", "release": "7", "arch": "amd64"}

    # Create Container
    if args.create:
        print("Creating Container")
        if c.defined:
            print("Container exists")
            sys.exit(1)
        # Create the container rootfs
        if not c.create("download", lxc.LXC_CREATE_QUIET, config):
            print("Failed to create container rootfs", file=sys.stderr)
            sys.exit(1)

    # Start Container
    if args.start:
        print("Starting Container")
        if not c.start():
            print("Failed to start container", file=sys.stderr)
            sys.exit(1)
        print("Container Started")
        # Query some information
        print("Container state: %s" % c.state)
        print("Container PID: %s" % c.init_pid)

    # Stop Container
    if args.stop:
        print("Stopping the Container")
        # Try a clean shutdown first, then force a stop if it times out
        if not c.shutdown(3):
            c.stop()
        if c.running:
            print("Stopping the container")
            c.stop()
            c.wait("STOPPED", 3)

You will need to install the lxc-python2 package using pip so that the script can import the lxc module.

So, to create a container and start it up, I simply run the following

    $ ./launch-lxc-demo.py --help
    usage: launch-lxc-demo.py [-h] [--name NAME] [--create] [--start] [--stop]

    Create a LXC container

    optional arguments:
      -h, --help   show this help message and exit
      --name NAME  Container name
      --create     Create the Container
      --start      Start the Container
      --stop       Stop the Container

    $ ./launch-lxc-demo.py --name test01 --create
    Creating Container
    $ lxc-ls
    test01
    $ ./launch-lxc-demo.py --name test01 --start
    Starting Container
    Container Started
    Container state: RUNNING
    Container PID: 689
    $ lxc-ls --active
    test01
    $ lxc-attach -n test01
    [root@test01 ~]#

You can also get and set the container configuration via the API to update your container configuration programmatically. For more information on the APIs, please check out the documentation here
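As a rough illustration of getting and setting configuration through the API, here is a small sketch. It assumes the container object offers get_config_item(), set_config_item() and save_config(), as the lxc.Container class in the Python bindings does. update_config_item is a hypothetical helper, and FakeContainer is only a stand-in so that the example runs without LXC installed. The real bindings may behave differently for unset or invalid keys, so treat this as a starting point.

```python
def update_config_item(container, key, value):
    """Set one config key on a container object and persist the change.

    Returns the previous value so the caller can log or revert it.
    """
    previous = container.get_config_item(key)
    if not container.set_config_item(key, value):
        raise RuntimeError("could not set %s" % key)
    if not container.save_config():
        raise RuntimeError("could not save the container config")
    return previous


class FakeContainer(object):
    """Stand-in for lxc.Container, for demonstration purposes only."""

    def __init__(self, items):
        self.items = dict(items)
        self.saved = False

    def get_config_item(self, key):
        return self.items.get(key)

    def set_config_item(self, key, value):
        self.items[key] = value
        return True

    def save_config(self):
        self.saved = True
        return True


key = "lxc.cgroup.memory.limit_in_bytes"
c = FakeContainer({key: "1073741824"})

# Raise the memory limit from 1 GiB to 2 GiB.
old = update_config_item(c, key, str(2 * 2**30))
print("old: %s, new: %s" % (old, c.get_config_item(key)))
```

With the real bindings you would pass lxc.Container("test01") instead of the fake object.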

Conclusion

So we have gone through some of the more interesting features available in LXC. There are more features than this post can cover, so if you are interested in more information, please visit the official LXC website at Linux Containers. You can also check out the excellent blog posts by Stéphane Graber, the project lead on LXC, here.

As an Operator, I have used both Docker and LXC for testing and deploying infrastructure, and personally, I prefer LXC due to its VM-like characteristics, which makes it easy for me to run my existing configuration management manifests inside LXC.

I also enjoy the flexibility afforded by the LXC configuration file, and in fact, I have used my configuration management tool to create and maintain LXC configuration files and launch customised containers into the infrastructure. With new tools like LXD coming up, I am confident that LXC will find its place in DevOps, alongside Docker and rkt.